

What is Generative Engine Optimization (GEO)? Guide for 2025

Published: August 22, 2025



Generative Engine Optimization (GEO) is the emerging practice of optimizing content so it is visible, referenced, and cited within AI-generated answers from systems like Google’s AI Overviews, Google’s AI Mode, or ChatGPT—rather than just ranking in traditional search engine results (SERPs). Unlike older methods of SEO, which focus on climbing SERPs through keywords and backlinks, GEO emphasizes creating factual, authoritative, semantically rich, and well-structured content that large language models (LLMs) can easily interpret.

Over the past five to seven years, LLMs have advanced to the point where they capture fine-grained statistical, syntactic, and semantic information from text. Early models like BERT demonstrated that transformers could handle syntactic tasks (such as parsing sentence structure) while excelling at semantic tasks like natural language understanding and textual entailment—capabilities that laid the foundation for today’s generative models. These systems now go beyond classification to generate coherent, context-aware responses, grounding their outputs in fact-dense and semantically structured sources.

The goal of Generative Engine Optimization (GEO) is not only to appear in search results, but to become part of the synthesized response itself, ensuring that your brand or content is directly referenced by AI. This requires strategies such as publishing comprehensive, credible content with clear structure, incorporating citations and data, optimizing for conversational queries and user intent, building brand authority through mentions, and maintaining technical accessibility for AI crawlers.

Key Takeaways

- How GEO is different from traditional SEO: Traditional SEO is about ranking higher on Google’s SERPs through keywords, backlinks, and technical optimization, often considered the “older” SEO methods. GEO, on the other hand, focuses on making your content retrievable, re-rankable, and reference-worthy inside AI-generated answers from tools like Google’s AI Overviews, AI Mode, or ChatGPT. Instead of optimizing only for clicks, GEO ensures your brand is cited and trusted within the AI’s response itself, a more direct influence on user decisions.

- Content as the cornerstone of Generative Engine Optimization (GEO): Content with high semantic density—such as listicles, guides, FAQs, and deep informational resources—performs best. These formats cover broad topic clusters, incorporate precise terminology, and provide structured, modular sections that LLMs can easily extract and cite. In GEO, success depends on publishing fact-dense, authoritative, and well-structured content that maps to conversational queries and buyer intent, making your brand part of the AI-driven discovery process.

- Measuring Generative Engine Optimization (GEO) Impact: Clicks and traffic alone don’t capture GEO’s impact. Enterprises need new KPIs such as AI Visibility Rate (AIGVR), Citation Rate, Content Extraction Rate (CER), and Conversation-to-Conversion Rate. The most reliable data source for tracking these is log file analysis, which reveals when ChatGPT or other AI tools fetch your content while answering a user’s prompt, data that GA4 misses. For enterprises, custom technology that maps AI citations to conversions will become essential for proving ROI in an AI-first search world.

How Generative Engine Optimization (GEO) Works

Generative Engine Optimization (GEO) is not just about placing the right keywords into your content; it’s about ensuring your material is retrievable, re-rankable, and reference-worthy in AI-generated results. Unlike traditional SEO, which optimizes for how search engines rank links, GEO adapts to how large language models (LLMs) like ChatGPT, Google’s AI Overviews, and AI Mode fetch, filter, and synthesize answers. Below are the key layers that define how GEO works, supported by recent findings and patents.

Want to skip all of this? Have the Go Fish team of experts give you an AI Search audit.

1. Retrieval and Reranking Models

At the core of systems like ChatGPT is a multi-stage retrieval pipeline. First, a broad set of documents is retrieved, but being retrieved doesn’t guarantee inclusion in the final answer. A reranking model, such as ‘ret-rr-skysight-v3’ in ChatGPT, then reorders sources based on quality and authority signals before synthesis.

Here’s what you would do to perform Generative Engine Optimization (GEO) against these findings:

- Comprehensiveness and authority matter most. Shallow or low-trust content is unlikely to survive reranking.

- Consistency in factual presentation ensures AI models interpret the content as reliable.
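The two-stage pipeline described above can be illustrated with a toy retrieve-then-rerank sketch. This is not ChatGPT’s actual reranker, whose internals are not public; the term-overlap retrieval, the `authority` and `depth` signals, and the weights are hypothetical stand-ins chosen only to show how a document can be retrieved and still drop out after reranking.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    url: str
    text: str
    authority: float  # hypothetical 0-1 trust signal
    depth: float      # hypothetical 0-1 comprehensiveness signal


def retrieve(query: str, docs: list[Doc], k: int) -> list[Doc]:
    """Stage 1: cheap recall step that keeps the k docs sharing the most query terms."""
    terms = set(query.lower().split())

    def overlap(doc: Doc) -> int:
        return len(terms & set(doc.text.lower().split()))

    return sorted(docs, key=overlap, reverse=True)[:k]


def rerank(candidates: list[Doc], n: int, w_auth: float = 0.6, w_depth: float = 0.4) -> list[Doc]:
    """Stage 2: reorder candidates by weighted (hypothetical) quality signals."""
    def quality(doc: Doc) -> float:
        return w_auth * doc.authority + w_depth * doc.depth

    return sorted(candidates, key=quality, reverse=True)[:n]


docs = [
    Doc("a.com/geo-guide", "generative engine optimization guide with data", 0.9, 0.8),
    Doc("b.com/geo-tips", "generative engine optimization tips", 0.3, 0.2),
    Doc("c.com/geo-faq", "engine optimization faq", 0.7, 0.6),
]

candidates = retrieve("generative engine optimization", docs, k=3)
final = rerank(candidates, n=2)
print([d.url for d in final])
```

Here `b.com/geo-tips` ties for the best term overlap in stage 1 but is dropped in stage 2 because its quality signals are weak, which is the pattern the bullets above warn about.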

2. Freshness as a Ranking Signal

Our findings confirm that systems like ChatGPT actively use a freshness scoring profile, meaning recent updates are heavily weighted. A highly detailed but outdated piece of content can lose ground to newer, less comprehensive material.

Here’s what you would do to perform Generative Engine Optimization (GEO) against these findings:

- Regularly update cornerstone content to preserve visibility.

- Add timestamps, revision histories, and structured metadata so AI models can clearly detect recency.
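One widely used way to expose recency as structured metadata is schema.org `Article` markup with `datePublished` and `dateModified`, embedded as JSON-LD. The Python sketch below generates such a block; the headline and the modification date are placeholders, and whether a given AI system reads these particular fields is an assumption rather than something the findings above confirm.

```python
import json
from datetime import date


def article_jsonld(headline: str, published: date, modified: date) -> str:
    """Build a schema.org Article JSON-LD snippet exposing publish and update dates."""
    if modified < published:
        raise ValueError("dateModified cannot precede datePublished")
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    # Wrap the JSON in the script tag pages use to embed JSON-LD.
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


snippet = article_jsonld(
    "What is Generative Engine Optimization (GEO)?",
    published=date(2025, 8, 22),
    modified=date(2025, 9, 15),  # placeholder revision date
)
print(snippet)
```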

3. Query Intent Detection

Modern generative systems don’t just match keywords; they analyze user intent with mechanisms such as the ‘enable_query_intent: true’ processor (found in ChatGPT). This aligns with patents describing context-aware query classification (US9449105B1).

Here’s what you would do to perform Generative Engine Optimization (GEO) against these findings:

- Content should be purpose-driven (e.g., definition pages for definitions, how-to guides for tasks, comparison tables for evaluations).

- Pages that misalign with intent risk exclusion, even if they rank well traditionally.
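To make the intent-to-format mapping concrete, here is a deliberately simple rule-based sketch for auditing whether a page’s format matches the queries it targets. Production systems like those described in the patent use learned classifiers; the cue phrases, intent labels, and format names below are hypothetical.

```python
# Hypothetical cue phrases mapped to the content format that best serves each intent.
# Order matters: the first matching rule wins.
INTENT_RULES = [
    ("comparison", ("vs", "versus", "compare", "difference between"), "comparison table"),
    ("how_to", ("how to", "steps to", "guide to"), "how-to guide"),
    ("definition", ("what is", "what are", "define", "meaning of"), "definition page"),
]


def classify_intent(query: str) -> tuple[str, str]:
    """Return (intent, recommended format) for a query."""
    q = f" {query.lower()} "
    for intent, cues, fmt in INTENT_RULES:
        # Pad cues with spaces so "vs" does not match inside longer words.
        if any(f" {cue} " in q for cue in cues):
            return intent, fmt
    return "informational", "explainer article"


def is_aligned(query: str, page_format: str) -> bool:
    """True if the page's format matches the query's detected intent."""
    return classify_intent(query)[1] == page_format


print(classify_intent("GEO vs SEO"))
print(is_aligned("what is generative engine optimization", "how-to guide"))
```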

4. Vocabulary-Aware Search and Domain Authority

The discovery of the ‘vocabulary_search_enabled: true’ processor inside ChatGPT indicates that AI models prioritize content that uses domain-specific terminology accurately and consistently. This aligns with Google’s MUM (Multitask Unified Model), designed to better interpret nuanced language across domains (Google MUM blog).

Here’s what you would do to perform Generative Engine Optimization (GEO) against these findings:

- Use precise, industry-specific terms that demonstrate expertise.

- Provide definitions, glossaries, and schema markup so models recognize and contextualize terminology.
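For the glossary-plus-schema recommendation, schema.org provides the `DefinedTermSet` and `DefinedTerm` types. The sketch below emits one from a Python dictionary; the glossary entries are placeholders, and the assumption that vocabulary-aware retrieval reads this markup is not confirmed by the findings above.

```python
import json

# Placeholder glossary entries; replace with your own domain terminology.
GLOSSARY = {
    "Query fan-out": "Expanding one user query into multiple related sub-queries before retrieval.",
    "Reranking": "Reordering retrieved documents by quality and relevance signals before synthesis.",
}


def glossary_jsonld(name: str, terms: dict[str, str]) -> dict:
    """Build a schema.org DefinedTermSet describing a site glossary."""
    return {
        "@context": "https://schema.org",
        "@type": "DefinedTermSet",
        "name": name,
        "hasDefinedTerm": [
            {"@type": "DefinedTerm", "name": term, "description": definition}
            for term, definition in terms.items()
        ],
    }


print(json.dumps(glossary_jsonld("GEO Glossary", GLOSSARY), indent=2))
```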

5. Source-Specific Scoring and Connected Data

Our findings show AI retrieval systems apply different scoring weights to public web vs. private sources (Google Drive, Dropbox, Notion). Public content undergoes full reranking, while trusted connected sources are scored more lightly. This means:

- For brand content on the public web, optimize for depth, credibility, and structure to pass strict rerank filters.

- For internal enterprise content (e.g., knowledge bases), ensure technical accessibility so AI can parse it easily.

Related Patent: Generating query answers from a user’s history — US20250036621A1

6. Query Fan-Out and Grounding

A defining feature of AI-powered search is query fan-out: when a user enters a question, the model doesn’t just run a single query. Instead, it generates multiple related sub-queries, each designed to capture different angles, entities, or contexts around the original request. This process allows the system to cast a wider net of retrieval before synthesizing an answer.

- Query Expansion: As described in Google’s patent on retrieval of information using trained neural networks (US11769017B1), the system can expand user input into semantically similar or contextually relevant variations. This means optimization isn’t just about ranking for one phrase — your content should cover a cluster of semantically adjacent queries.

- Contextual Grounding: Google’s stateful response patent (WO2024064249A1) and context-aware query classification patent (US9449105B1) emphasize grounding answers in relevant, high-trust contexts, including a user’s past interactions or domain-specific signals. Grounding is how the model selects authoritative snippets to “anchor” its generated response. If your content is structured, cited, and contextually rich, it’s more likely to become part of that anchor set.

- Domain Coverage: Google’s MUM framework (Introducing MUM) illustrates how LLMs use multimodal, multilingual, and cross-domain understanding to ground answers across diverse sources. This further reinforces the need for content that’s comprehensive and interlinked across related topics, so it can surface when fan-out explores adjacencies.

Here’s what it means to optimize for Generative Engine Optimization (GEO) against these findings:

- Optimize for query clusters, not just single keywords: AI models expand user queries into multiple variations, so content must cover semantic adjacencies, synonyms, and related entities.

- Create grounding-ready content: Include citations, structured data, and verifiable facts so your pages can serve as authoritative “anchors” when models synthesize answers.

- Support multiple user intents: Structure content to satisfy definitions, comparisons, how-tos, and other likely fan-out interpretations.

- Build semantic ecosystems: Interlink related pages and use schema markup to show contextual relationships, making it easier for AI to ground responses in your site.

- Prioritize trust and coverage over narrow keyword targeting: Comprehensive, high-authority content is more likely to be selected as a grounding source across diverse fan-out queries.
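A rough way to audit fan-out readiness is to expand a seed query into sub-queries and check which ones your pages address. In the sketch below, the expansion templates are hypothetical stand-ins (the patents describe neural models generating these variations), and “coverage” is crudely approximated as every content word of a sub-query appearing in some page.

```python
# Hypothetical templates standing in for model-generated sub-queries.
FANOUT_TEMPLATES = [
    "what is {q}",
    "{q} examples",
    "{q} vs seo",
    "how to measure {q}",
    "{q} tools",
]
STOPWORDS = {"what", "is", "how", "to", "vs"}


def fan_out(seed: str) -> list[str]:
    """Expand a seed query into related sub-queries."""
    return [t.format(q=seed.lower()) for t in FANOUT_TEMPLATES]


def covered(sub_query: str, pages: dict[str, str]) -> list[str]:
    """Return URLs whose text contains every content word of the sub-query."""
    words = [w for w in sub_query.split() if w not in STOPWORDS]
    return [url for url, text in pages.items() if all(w in text.lower().split() for w in words)]


def fanout_coverage(seed: str, pages: dict[str, str]) -> float:
    """Share of sub-queries answered by at least one page (0.0 to 1.0)."""
    subs = fan_out(seed)
    return sum(1 for s in subs if covered(s, pages)) / len(subs)


pages = {
    "/geo-guide": "geo explained with examples and how to measure geo impact",
    "/geo-vs-seo": "geo vs seo a comparison of goals and kpis",
}
print(fanout_coverage("GEO", pages))
```

In this example the uncovered sub-query (“geo tools”) points at the gap a supporting page could fill.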

A Generative Engine Optimization (GEO) Strategy Framework

A GEO strategy is designed to maximize visibility within AI-generated results by aligning content creation and optimization with how retrieval systems, rerankers, and large language models (LLMs) operate. Unlike traditional SEO, GEO is less about climbing SERPs and more about ensuring content is retrievable, re-rankable, and reference-worthy when AI synthesizes an answer.

1. Passage-Level Optimization

AI models frequently retrieve and rank information at the passage level, not just the page level. This means individual sections, FAQs, or tables of data can be cited independently of the entire article.

- Write modular content blocks (clear subheadings, scannable sections, structured FAQs) that can stand alone.

- Use semantic markup (schema, headings, lists, tables) to make passages machine-readable.

- Ensure each section delivers a complete, factual answer that could be extracted and cited directly.
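To audit passage-level readiness, a page can be split into heading-delimited passages and each one checked for standalone usefulness. The sketch below assumes Markdown input with `#`-style headings, and its word-count thresholds are arbitrary illustrations, not values any AI system is known to use.

```python
import re

# Markdown ATX headings ("#" through "######") start a new passage.
HEADING = re.compile(r"^#{1,6}\s+(.*)$")


def _flush(heading: str, body: list[str], passages: list[tuple[str, str]]) -> None:
    """Join buffered lines into one passage, skipping empty sections."""
    text = " ".join(line.strip() for line in body if line.strip())
    if text:
        passages.append((heading, text))


def split_passages(markdown: str) -> list[tuple[str, str]]:
    """Split Markdown into (heading, text) passages at each heading line."""
    passages: list[tuple[str, str]] = []
    heading, body = "(intro)", []
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            _flush(heading, body, passages)
            heading, body = match.group(1).strip(), []
        else:
            body.append(line)
    _flush(heading, body, passages)
    return passages


def audit(passages: list[tuple[str, str]], min_words: int = 8, max_words: int = 300) -> list[tuple[str, int, str]]:
    """Flag passages that look too thin or too long to be extracted on their own."""
    report = []
    for heading, text in passages:
        n = len(text.split())
        if n < min_words:
            status = "too short"
        elif n > max_words:
            status = "too long"
        else:
            status = "ok"
        report.append((heading, n, status))
    return report


page = """# What is GEO?
GEO is the practice of optimizing content so AI systems can retrieve and cite it.

## Why it matters
It matters.
"""
for row in audit(split_passages(page)):
    print(row)
```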

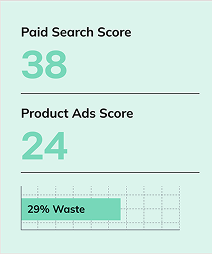

We’re so confident in how much this impacts AI Search that Go Fish created custom technology called Barracuda, which helps optimize pages passage by passage to find the highest-impact opportunities to influence LLMs. Barracuda allows fast analysis of individual passages or “articles” to measure clarity, fact density, and semantic relevance all in one place.

2. Freshness and Continuous Updates

Generative systems apply a freshness scoring profile, meaning recency is an active ranking signal. Even authoritative evergreen content can lose relevance if not updated.

- Regularly refresh cornerstone pages with new data, stats, and references.

- Maintain clear timestamps and revision logs for transparency.

- Build a cadence of incremental updates instead of one-off static publishing.
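Neither the findings above nor public documentation specify how the freshness scoring profile works, but recency signals are often modeled as exponential decay. The sketch below blends a relevance score with a freshness factor; the 180-day half-life and the 0.3 recency weight are hypothetical parameters, used only to show how a comprehensive but stale page can fall behind a thinner, recently updated one.

```python
from datetime import date


def freshness(last_updated: date, today: date, half_life_days: float = 180.0) -> float:
    """Exponential decay: 1.0 when just updated, 0.5 after one half-life."""
    age = max((today - last_updated).days, 0)
    return 0.5 ** (age / half_life_days)


def blended_score(relevance: float, last_updated: date, today: date, weight: float = 0.3) -> float:
    """Mix relevance (0-1) with freshness; `weight` is the share given to recency."""
    return (1 - weight) * relevance + weight * freshness(last_updated, today)


today = date(2025, 8, 22)
deep_but_old = blended_score(0.90, date(2023, 8, 22), today)
thin_but_new = blended_score(0.75, date(2025, 8, 1), today)
print(round(deep_but_old, 3), round(thin_but_new, 3))
```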

3. Semantic Footprint Expansion

Because of query fan-out, LLMs expand a user query into clusters of related variations. Content must grow beyond exact keywords and cover adjacent concepts to maximize retrieval opportunities.

- Target clusters of related queries rather than single terms.

- Expand coverage with supporting pages, informational content, and context-rich internal links.

- Focus on entity-driven content (people, products, processes, industries) to grow topical depth.

- Use schema markup to tie entities and relationships together, reinforcing context.

4. Intent-First Structuring

With query intent detection active, AI models map a query to the type of answer needed (definition, how-to, comparison). Misaligned content risks exclusion.

- Publish purpose-specific formats: guides, checklists, product comparisons, FAQs, explainer articles.

- Label and structure content so intent is unmistakable to both readers and crawlers.

- Provide direct answers up front, then expand with supporting detail for depth.
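One way to label question-and-answer content so its intent is unmistakable to crawlers is schema.org `FAQPage` markup, which pairs each `Question` with an `acceptedAnswer`. The sketch below builds it from (question, answer) pairs; the answer text is a placeholder, and this markup is a general structured-data convention rather than something the findings above tie directly to AI citation.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                # Lead with the direct answer; supporting detail can follow on the page.
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    ("What is Generative Engine Optimization (GEO)?",
     "GEO is the practice of optimizing content so it is retrieved and cited in AI-generated answers."),
])
print(json.dumps(faq, indent=2))
```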

5. Grounding and Authority Signals

Grounding ensures that generated answers are anchored in authoritative, verifiable sources. Content must project trust and credibility to be selected.

- Include citations, data, and external references from authoritative publications.

- Establish brand mentions across the web to strengthen the perceived authority of your brand as an entity.

- Use clear, consistent factual statements—avoid ambiguity that may reduce trust.

6. Vocabulary and Domain Expertise

With vocabulary-aware retrieval, LLMs favor content that uses accurate, domain-specific terminology.

- Incorporate industry language and entity references naturally to increase entity density and fact density.

- Provide glossaries and definitions to signal expertise and help with cross-domain understanding.

- Align content with Google’s MUM-style interpretation, which values nuance across languages and modalities.

Generative Engine Optimization (GEO) vs. Traditional SEO Comparison

As search evolves from traditional link-based rankings to AI-generated answers, it’s important to understand how Generative Engine Optimization (GEO) differs from classic SEO. While SEO focuses on ranking pages in search engine results, GEO is about making content retrievable, re-rankable, and reference-worthy within AI-driven responses. The table below highlights the key differences in goals, tactics, and success measures between SEO and Generative Engine Optimization (GEO):

| Comparison | Traditional SEO | Generative Engine Optimization (GEO) |
|---|---|---|
| Primary Goal | Rank high on search engine results pages (SERPs) to drive organic traffic. | Be retrieved, reranked, and cited in AI-generated answers (Google AI Overviews, AI Mode, ChatGPT). |
| Optimization Level | Page-level optimization (title tags, meta descriptions, keywords). | Passage-level optimization: individual sections, FAQs, or tables must stand alone as authoritative, extractable answers. |
| Content Strategy | Focus on keyword targeting and backlink acquisition. | Focus on semantic coverage: expand topical depth, cover related entities, synonyms, and adjacent queries to grow a semantic footprint. |
| Freshness | Evergreen content can sustain rankings with minor updates. | Freshness scoring is always on: newer content is prioritized, and cornerstone assets must be updated frequently with timestamps and revision logs. |
| Query Handling | Matches queries to pages through keyword relevance and link equity. | Models use query fan-out (multiple sub-queries) and grounding in high-trust contexts, requiring broader content clusters and authoritative anchors. |
| Intent Alignment | Optimizes for transactional, navigational, or informational queries in SERPs. | Content must be intent-first structured, with clear formats for definitions, comparisons, and how-tos; misaligned content risks exclusion. |
| Authority Signals | Backlinks, domain authority, and traffic metrics. | Grounding-ready signals: citations, statistics, brand mentions, and factual consistency to become an authoritative anchor source. |
| Vocabulary & Domain Expertise | Keywords and partial synonyms are sufficient for SERP rankings. | Vocabulary-aware search prioritizes precise industry terminology, entity-rich writing, and schema-supported definitions. |
| Technical Factors | Crawlability, site speed, mobile optimization, structured data. | Same technical factors apply, but with added emphasis on machine interpretability for LLMs (structured data, modular content, clarity). |
| Measurement of Success | Higher rankings, traffic, click-through rates. | Inclusion and citation in AI-generated answers; visibility across AI ecosystems rather than just SERPs. |

Measuring AI Search Presence: KPIs and Enterprise Challenges

As AI search becomes a primary channel for discovery, enterprises face a new challenge: measuring visibility when users never touch the SERP or even click through to a website. Traditional analytics platforms like GA4 miss these interactions entirely, creating a blind spot around how often content is being cited in ChatGPT, Google’s AI Mode, or other LLM-driven experiences.

Why Traditional Analytics Fall Short

The rise of conversational AI tools like ChatGPT and Google’s AI Mode has created a massive visibility blind spot for enterprises and mid-market companies (and arguably, almost all companies). When your content is cited inside an AI-generated answer, users may never click through to your site in a traditional way. This means platforms like GA4 or Adobe Analytics, which rely on JavaScript events, don’t capture the true footprint of your content in AI-driven discovery.

The result: companies may be underestimating how often their brand is referenced in LLM conversations and missing critical insight into their role in the modern buyer journey.

Log File Analysis: The Missing Lens

Your server log files are the most reliable record of AI-driven usage. Unlike JavaScript analytics, logs capture every request made to your server, including the ChatGPT-User agent, which indicates that a real user’s question triggered ChatGPT to pull from your site.

Key benefits of log analysis for AI Search measurement:

- Identify which pages are cited in real conversations by isolating ChatGPT-User visits.

- Separate AI citations from crawler activity by distinguishing indexing and training crawlers (such as OAI-SearchBot and GPTBot) from ChatGPT-User requests triggered by live user prompts.

- Quantify hidden demand by tracking requests invisible to GA4 (in one case, 490 ChatGPT references vs. only 3 GA4 sessions in the same period).

- Prioritize high-value topics by analyzing which URLs are consistently cited in AI answers.

This approach transforms raw logs into visibility metrics that reflect your true role in the AI search ecosystem.
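The log analysis described above can be sketched in a few lines of Python. This sketch assumes the Apache/Nginx “combined” log format and classifies requests by the user-agent tokens OpenAI documents (ChatGPT-User, OAI-SearchBot, GPTBot); the sample lines are invented. User-agent strings can be spoofed, so a production pipeline should also verify requests against OpenAI’s published IP ranges, and a ChatGPT-User fetch shows a page was retrieved during a conversation, which is a proxy for, not proof of, a citation.

```python
import re
from collections import Counter
from typing import Iterable, Optional

# "combined" format: ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# ChatGPT-User marks fetches triggered by a user's prompt;
# OAI-SearchBot indexes for ChatGPT search; GPTBot crawls for model training.
AGENT_CLASSES = {
    "ChatGPT-User": "live_prompt",
    "OAI-SearchBot": "search_crawler",
    "GPTBot": "training_crawler",
}


def classify(agent: str) -> Optional[str]:
    """Map a user-agent string to a traffic class, or None for other agents."""
    for token, label in AGENT_CLASSES.items():
        if token in agent:
            return label
    return None


def count_ai_hits(lines: Iterable[str]) -> Counter:
    """Count requests per (class, path), skipping other agents and malformed lines."""
    counts: Counter = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if not m:
            continue
        label = classify(m["agent"])
        if label:
            counts[(label, m["path"])] += 1
    return counts


# Illustrative log lines (documentation IP ranges, made-up paths).
sample = [
    '203.0.113.5 - - [22/Aug/2025:10:00:00 +0000] "GET /blog/geo HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; ChatGPT-User/1.0; +https://openai.com/bot"',
    '203.0.113.9 - - [22/Aug/2025:10:05:00 +0000] "GET /blog/geo HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; GPTBot/1.1; +https://openai.com/gptbot"',
    '198.51.100.7 - - [22/Aug/2025:10:06:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    "not a log line",
]

for (label, path), n in sorted(count_ai_hits(sample).items()):
    print(label, path, n)
```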

New KPI: Conversation to Conversion

To measure impact in AI Search, we need to move beyond rankings and sessions to a Conversation to Conversion metric:

- Conversation: Any instance where your content is cited in an LLM answer, evidenced by a ChatGPT-User log entry.

- Conversion: The measurable outcome (lead, form fill, purchase) from a user who engaged after seeing your brand in an AI conversation.

By mapping log file activity to CRM or analytics data, enterprises can track how often AI-driven conversations translate into business outcomes, offering a truer measure of SEO effectiveness in an LLM-first world.
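Once log activity and CRM outcomes are keyed to the same pages and period, Conversation-to-Conversion is a simple ratio. The sketch below assumes both datasets have already been joined upstream (that join, for example via referrer data, is the hard part and is not shown); the page paths and counts are made-up illustration values.

```python
# Hypothetical, already-joined inputs for one reporting period:
# ChatGPT-User fetches per page (from server logs) and CRM conversions
# attributed to AI-referred visitors landing on the same pages.
conversations = {"/blog/geo": 400, "/pricing": 50, "/docs/setup": 0}
ai_conversions = {"/blog/geo": 6, "/pricing": 4}


def conversation_to_conversion(conv: dict[str, int], wins: dict[str, int]) -> dict[str, float]:
    """Per-page conversions divided by conversations; pages with zero conversations are skipped."""
    return {page: wins.get(page, 0) / n for page, n in conv.items() if n > 0}


rates = conversation_to_conversion(conversations, ai_conversions)
overall = sum(ai_conversions.values()) / sum(conversations.values())
for page, rate in rates.items():
    print(f"{page}: {rate:.1%}")
print(f"overall: {overall:.1%}")
```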

Rising Need of Custom Technology for AI Search Reporting and Analytics

For global enterprises (and mid-market companies) with millions of pages and vast digital ecosystems, manual log analysis is not enough. Custom technology will become the backbone of GEO measurement and strategy:

- Automated log parsing at scale: Enterprise tools must continuously scan server logs to surface AI-driven visits in near real time.

- Passage-level detection: Identifying not just which page is cited, but which section or passage was pulled into an LLM answer.

- Semantic mapping dashboards: Visualizing clusters of content being referenced across AI queries to show your semantic footprint in AI ecosystems, helping teams quickly redirect effort toward unmet conversations, prompts, or queries.

- Integration with CRMs & attribution models: Connecting AI-driven visits to downstream revenue to calculate the true ROI of GEO efforts.

In practice, this means enterprises will need custom technology, much like they built analytics and BI stacks for traditional SEO, to measure, attribute, and scale visibility in AI Search. Those who invest early will gain an edge in understanding how conversations, not just clicks, drive conversions.

Comparing Traditional SEO KPIs and Generative Engine Optimization (GEO) KPIs

To measure this new landscape, businesses need to adopt GEO-specific KPIs that reveal how often their content is surfaced in AI conversations, how effectively it is extracted, and what business impact follows. Metrics such as AI Visibility Rate (AIGVR), Citation Rate, Content Extraction Rate (CER), and Conversation-to-Conversion Rate give enterprises a more accurate view of their influence in the buyer journey. By combining SEO and GEO KPIs, organizations can build a holistic measurement framework that captures both traditional discovery and AI-driven engagement, ultimately linking visibility in search to real business outcomes.

Here’s a comparison table of traditional SEO KPIs and new Generative Engine Optimization (GEO) KPIs that matter:

| Metric Category | Traditional SEO KPIs | Generative Engine Optimization (GEO) KPIs |
|---|---|---|
| Visibility | Organic Impressions: How often your site appears in SERPs. Share of Voice (SOV): Visibility relative to competitors in search. | AI Visibility Rate (AIGVR): Frequency and prominence of your content appearing in AI-generated answers. Citation Rate: Number of times content is quoted or referenced directly by AI systems. |
| Engagement | Click-Through Rate (CTR): % of users clicking on your SERP listing. Bounce Rate / Engagement Time: Measures user interaction depth. | Content Extraction Rate (CER): How often AI extracts and uses specific passages or data points. Passage-Level Visibility: Tracking which content blocks (FAQs, tables, snippets) are referenced by AI. |
| Conversion | Conversion Rate by Channel: % of organic sessions that convert to leads, sales, or revenue. Assisted Conversions: SEO’s contribution in multi-touch attribution. | Conversation-to-Conversion Rate: % of AI-cited content engagements that lead to a measurable business action. AI-Driven Conversion Lift: Incremental conversions traced back to AI conversations. |
| Authority & Trust | Backlink Quality & Volume: External validation of authority. Domain Authority / E-E-A-T Signals. | AI Trust Signals: How often AI models ground their answers in your brand/content. Entity Authority Score: Visibility of your brand across AI knowledge graphs and entity networks. |
| Content Performance | Ranking Distribution: % of keywords ranking in top positions. Traffic per Page / Topic Cluster. | Semantic Footprint Growth: Expansion of entity and topic coverage across AI-cited clusters. Query Fan-Out Coverage: Breadth of adjacent queries where your content is referenced. |
| Technical / Access | Core Web Vitals: Speed, stability, mobile performance. Crawl Efficiency: Search engines’ ability to index your site. | AI Crawl Indexability: Whether LLM crawlers (e.g., ChatGPT-User) can access your site. Extraction Success Rate: How easily structured content (schema, lists, tables) is parsed and reused by AI. |
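The GEO KPIs above need operational definitions before they can be tracked. The sketch below shows one possible definition of visibility rate and citation rate, assuming you periodically run a fixed set of prompts through an AI system and record whether your brand is mentioned and which URLs are cited. `TrackedAnswer`, its fields, and the example data are hypothetical, and these KPIs may be defined differently in practice.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class TrackedAnswer:
    """One captured AI answer for a tracked prompt (hypothetical record format)."""
    prompt: str
    brand_mentioned: bool
    cited_urls: list[str] = field(default_factory=list)


def is_own(url: str, domain: str) -> bool:
    """True if the URL's host is the domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == domain or host.endswith("." + domain)


def geo_kpis(answers: list[TrackedAnswer], domain: str) -> dict:
    """Visibility rate, citation count, and citation rate over a prompt sample."""
    if not answers:
        return {"visibility_rate": 0.0, "citation_count": 0, "citation_rate": 0.0}
    own = [[u for u in a.cited_urls if is_own(u, domain)] for a in answers]
    return {
        # Share of answers that mention the brand at all.
        "visibility_rate": sum(a.brand_mentioned for a in answers) / len(answers),
        # Total citations pointing at your domain.
        "citation_count": sum(len(c) for c in own),
        # Share of answers citing at least one of your URLs.
        "citation_rate": sum(1 for c in own if c) / len(answers),
    }


answers = [
    TrackedAnswer("what is geo", True, ["https://www.example.com/geo", "https://other.org/a"]),
    TrackedAnswer("geo vs seo", True),
    TrackedAnswer("best geo tools", False, ["https://notexample.com/x"]),
    TrackedAnswer("how to measure geo", False),
]
print(geo_kpis(answers, "example.com"))
```

Matching on the parsed hostname rather than a substring keeps look-alike domains such as `notexample.com` from inflating the citation count.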

Common Questions and Answers About Generative Engine Optimization (GEO)

How does zero-click search relate to the need for Generative Engine Optimization (GEO)?

Zero-click search, where users get their answer directly in search results without clicking through, has been steadily increasing for years. GEO represents the next stage of that trend, as AI-generated responses often satisfy intent without the user ever visiting a website. This makes it critical for enterprises to map the buyer’s journey more precisely and focus on Conversation-to-Conversion metrics rather than just clicks.

By identifying which stages of the journey are influenced by AI references—awareness, consideration, or decision—you can create content that not only gets cited by AI engines but also drives users to take the next step toward conversion. In GEO, the emphasis shifts from earning a click to ensuring your brand is trusted and present within the conversation itself, influencing decisions at the source.

What is the objective of GEO: does it influence machines or humans?

The answer is both.

Over the last five to seven years, large language models (LLMs) have become central to natural language processing. These are massive neural networks trained on billions of words, learning to represent language by predicting words from context. Through this process, LLMs capture fine-grained statistical, syntactic, and semantic information, making them highly effective for downstream applications. Early models like BERT demonstrated that transformers could handle syntactic tasks (such as parsing sentence structure) while excelling at semantic tasks like natural language understanding and textual entailment.

Today’s generative LLMs (e.g., GPT-4, Llama 2, Gemini) go further: they don’t just classify or label—they generate coherent answers to open-ended prompts. These systems rely heavily on structured, fact-dense content and semantic signals to determine what sources to ground their outputs in. As adoption grows, this means that the first audience you must influence is the machine, because it decides what content will be cited.

That’s where Generative Engine Optimization (GEO) comes in. Its dual objective is:

- Influence machines (LLMs): Ensure your content is structured, factually rich, and semantically clear so it can be interpreted and cited.

- Influence humans: Provide authoritative, decision-focused information once that content is surfaced inside AI-generated responses.

In other words, GEO adapts SEO for a new reality: machines now act as the gatekeepers of visibility, and humans are the ultimate decision-makers.

How pivotal is content in Generative Engine Optimization (GEO)?

Content is the cornerstone of GEO. Unlike traditional SEO, where backlinks and technical signals played a larger role (circa 2012–2018), GEO rewards content that is semantically dense, factually accurate, and structured for extraction. This is why listicles, guides, FAQs, and deep informational articles often carry the most weight: they cover broad semantic ground, provide rich entity relationships, and are more likely to be cited in AI-generated answers. High-value GEO content is not only comprehensive but also modular, structured in passages, tables, and lists that LLMs can easily parse and reuse. The more semantically rich your content, the greater your LLM visibility footprint, which directly improves citation frequency, extraction rates, and ultimately business impact.

What fingerprints has Google left behind to tell us the direction they’re going?

Google has left a series of clear “fingerprints” showing how its evaluation of content has shifted from external signals (like backlinks and PageRank) to content-centric and user experience–driven evaluations. The clearest example is the rollout of the Helpful Content Update (HCU) and its underlying Helpful Content System, which introduced a sitewide classifier that rewards content written for people first, not just for algorithms.

Unlike earlier systems that leaned heavily on backlinks and external authority signals, the Helpful Content processor looks inward—evaluating signals such as:

- Content Depth & Usefulness: Does the page fully satisfy intent, or does it leave users searching elsewhere?

- Page Experience & Readability: Is the content easy to navigate, clearly structured, and accessible on all devices?

- Topical Alignment: Does the content demonstrate subject-matter expertise and align with the site’s overall focus?

- Signals of People-First Writing: Content created with audience needs in mind (not just keywords), with emphasis on clarity, context, and completeness.

This fingerprint tells us Google is deprioritizing shallow, SEO-only content and aligning its systems closer to the way AI-driven models evaluate authority and relevance. In other words, Google is moving toward experience-based, semantic-rich evaluation, a direction mirrored in Generative Engine Optimization (GEO), where LLMs prioritize content that is factual, structured, and contextually authoritative rather than just externally validated.

How do Google’s Quality Rater Guidelines play a role in Generative Engine Optimization (GEO)?

The Quality Rater Guidelines often validate many of the systems that Google’s search team puts in place. As with the systems described in patents, the evaluation criteria emphasize fact-dense, helpful, and unique content that provides real answers to users. For GEO, this alignment is critical: AI systems like Google’s AI Overviews and ChatGPT prioritize the same qualities when selecting and citing sources. By following the principles outlined in the Quality Rater Guidelines, enterprises can ensure their content is not only optimized for traditional search but also positioned to be referenced in AI-generated responses.

How has search behavior changed with AI Search systems?

Users have always turned to Google with questions, but AI Search systems are shifting the way those questions are asked. Instead of short, keyword-based queries, users are submitting longer, more specific, and conversational prompts. This creates both a challenge and an opportunity: brands now need to expand their semantic footprint, covering broader topic clusters, related entities, and context-rich content, to ensure they’re visible in AI-generated responses. In this environment, success comes not just from ranking for keywords but from being reference-worthy in the nuanced queries that AI search experiences now favor.

More on AI search from Go Fish Digital

- How to Find Pages on Your Site That ChatGPT May Be Hallucinating

- OpenAI’s Latest Patents Point Directly to Semantic SEO

- Everything an SEO Should Know About SearchGPT by OpenAI

- How to See When ChatGPT is Quoting Your Content By Analyzing Log Files

- How to Rank in ChatGPT and AI Overviews

- Top Generative Engine Optimization (GEO) Agencies

- Generative Engine Optimization Strategies (2025)

About Patrick Algrim
